Invited Talk

Session: TP4-2-1

Time: 12:50-13:10

On Intelligent Maritime Navigation System toward e-Navigation

Dr. Ki-Yeol Seo

Korea Research Institute of Ships & Ocean Engineering

In line with the e-Navigation strategy currently being developed by the International Maritime Organization (IMO), studies on the development and implementation of e-Navigation have been carried out in the EU and other countries. e-Navigation is a concept based on harmonizing shore-based support services with vessel navigation systems in response to user needs. This presentation introduces an intelligent maritime PNT system for future e-Navigation, focusing on four keywords: e-Navigation, PNT, Intelligent system, and the Future. Under the first keyword, “e-Navigation”, the talk covers international issues, the meaning of the concept, and the development status of e-Navigation in each country.

Under the second keyword, PNT technology and its infrastructure will be described in terms of “Positioning, Navigation, and Timing (PNT)”. In particular, research and development cases of GNSS and R-Mode navigation systems will be presented as a means of implementing Resilient PNT on vessels. Intelligent techniques and situational awareness for maritime navigation systems, volumetric navigation technology, and digital hydrography will also be discussed. Finally, a conceptual diagram of the intelligent and interactive maritime navigation system will be presented as an optimal navigation solution that integrates the information described above.

"e-Navigation + PNT + Intelligence = The Future"

The project "Development of technologies for DGNSS service performance enhancement and Port PNT monitoring", currently under way at KRISO, will be briefly introduced in relation to the above. The technology aims to enhance the reliability of maritime and port navigation by providing users with a protection level from the user's point of view, determined by navigational error estimation for port PNT monitoring. Problems in implementing e-Navigation and their solutions will also be considered.

Keywords: e-Navigation, PNT, Intelligence, GNSS, maritime navigation

Session: TP4-3-1

Time: 15:05-15:45

The Lightest Child-sized Humanoid Robot, CHARLES

Prof. Young-Jae Ryoo

Mokpo National University, Korea

In this invited talk, I would like to introduce the lightest child-sized humanoid robot and share our experience of designing and developing it: CHARLES (Cognitive Humanoid Autonomous Robot with Learning and Evolutionary System). I will also show several achievements with CHARLES. The robot performed a dance show to the K-pop song ‘Gangnam Style’ at the opening ceremony of the International Robotic Contest 2012, and won medals and awards at the FIRA (Federation of International Robot-soccer Association) Cup in 2013 and 2014.

Why do we need a light humanoid robot that is as tall as a child? Why did we develop the lightest humanoid robot, CHARLES? There are many humanoid robots in research, such as ASIMO, HUBO, and PETMAN. However, teaching humanoid robotics in education is still a struggle, because a full-sized research humanoid robot is neither affordable as an educational experimental set nor light enough for students to handle.

As a better solution, the child-sized humanoid robot CHARLES is lightweight and easy to handle. We are also going to open the platform so that any student or researcher in the world can obtain the CAD files to design the mechanism of the humanoid robot as well as the source files to program its motion.

I have taught classes on humanoid robots to undergraduate and graduate students through university lectures as well as tutorials at several conferences. The students were interested in the class and enjoyed understanding and designing humanoid robot technology. Using an open humanoid robot platform in education is a very effective method.

I’d like to share this experience in the invited talk. The talk covers the following: (1) Introduction of the open humanoid robot platform, CHARLES, (2) Design of the mechanism for a humanoid robot, (3) Design of the electronic hardware for a humanoid robot, (4) Software and programming for a humanoid robot, and (5) Walking theory and applications.

Keywords: Humanoid robot, CHARLES

Acknowledgements. This research was financially supported by the MEST and NRF through the Human Resource Training Project for Regional Innovation (No. 2012026068), and by the MSIP and KOITA through the Programs to Support Collaborative Research among Industry, Academia and Research Institutes.

Session: TP4-4-1

Time: 17:20-17:40

A Transaction-Sequence Based Recommendation Approach by Splitting Content Consuming Logs

Prof. Jee-Hyong Lee

Sungkyunkwan University, Korea

Due to the huge amount of data and the development of effective data processing algorithms, recommender systems have become indispensable tools in various domains such as movies, books, news, and music. They have an especially large effect on the content business.

However, the main shortcoming of commonly used recommender systems is that they do not consider associations among consumed contents. For example, one person may consume a set of content items separately, each in a different time slot, while another person consumes the same items successively within a single time slot. Even though they consumed the same items, the associations among the items differ. We may infer that the two users are in different contexts or have different interests. By considering these factors, we can recommend more relevant items to users.

Existing approaches can be categorized into transaction-based and sequence-based approaches. Transaction-based recommendation approaches treat content consuming logs as sets, or transactions. The order in which contents were consumed is ignored, which means that some information in the logs may be lost. Sequence-based recommendation approaches treat content consuming logs as sequences of content items, and can therefore use most of the information contained in the logs. However, local sequences of a user’s consumed contents are often of little importance, and taking them into account may not improve recommendation performance.

We propose a transaction-sequence based recommendation approach that combines the merits of the transaction-based and sequence-based approaches. It splits the original content consuming sequences into cohesive sub-sequences and treats each sub-sequence as a transaction. If we observe a content consuming log, we can find sub-sequences in which the contents are strongly associated with each other. Since users choose or consume contents under a context, such as a preference or purpose, it is very probable that contents chosen successively by a user are related.

In order to split users’ content consuming logs into sub-sequences, we build splitting rules. We assume that the cohesiveness of content consuming logs is mainly affected by the context in which users consumed the contents. We select features that may reflect the context related to the contents, and combine them into splitting rules using binary decision trees. We find optimal splitting rules with a genetic programming approach. To find strongly associated item sets in the cohesive sub-sequences and recommend them as the next items, an association rule based approach is adopted.

To evaluate the proposed approach, we conducted a number of experiments from various viewpoints. We evaluate the proposed approach on two real datasets: Web navigational logs and movie content consuming logs. The experimental results support the usefulness of the proposed approach for recommendation.
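The splitting idea can be illustrated with a minimal sketch. It assumes a single hand-written rule (start a new sub-sequence when the time gap between consecutive items exceeds a threshold) in place of the decision-tree rules found by genetic programming, and simple pair co-occurrence counts in place of full association rule mining. The function names, the `max_gap` parameter, and the sample log are hypothetical.

```python
from collections import Counter
from itertools import combinations


def split_log(log, max_gap):
    """Split a time-ordered log of (timestamp, item) pairs into sub-sequences.

    Assumption: a new sub-sequence starts whenever the gap to the previous
    event exceeds max_gap. This fixed rule stands in for learned rules.
    """
    transactions = []
    current = []
    previous_time = None
    for timestamp, item in log:
        if previous_time is not None and timestamp - previous_time > max_gap:
            transactions.append(current)
            current = []
        current.append(item)
        previous_time = timestamp
    if current:
        transactions.append(current)
    return transactions


def pair_counts(transactions):
    """Count how often each unordered item pair shares a transaction."""
    counts = Counter()
    for transaction in transactions:
        for pair in combinations(sorted(set(transaction)), 2):
            counts[pair] += 1
    return counts


def recommend(counts, item):
    """Rank items that co-occurred with `item`, most frequent first."""
    scores = Counter()
    for (a, b), n in counts.items():
        if a == item:
            scores[b] += n
        elif b == item:
            scores[a] += n
    return [other for other, _ in scores.most_common()]


# Hypothetical log: timestamps in minutes, items are content IDs.
log = [(0, "A"), (2, "B"), (3, "C"), (60, "D"), (61, "A"), (63, "B")]
transactions = split_log(log, max_gap=10)
counts = pair_counts(transactions)
top = recommend(counts, "A")
```

With this log, the one-hour pause separates two sub-sequences, and "B" ranks first for "A" because the pair appears in both. Treating the whole log as one transaction would hide that distinction.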

Keywords: Collaborative Filtering, Recommender System, Cohesive Unit, Genetic Programming, Association Rule

Acknowledgements. This research was partly supported by Basic Science Research Program through NRF funded by MOE (NRF-2012R1A1A2008062) and ICT R&D program of MSIP/IITP (10041244, Smart TV 2.0 Software Platform).

Session: TP5-1-1

Time: 8:40-9:20

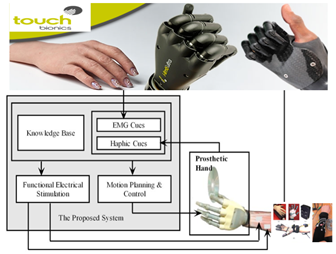

Exploring Human Motion Capabilities to Prostheses: Sensing, Algorithms and Applications

Prof. Honghai Liu

University of Portsmouth, UK

Enabling amputees to “experience” human hand skills is an evidently challenging problem, in that it requires multi-disciplinary efforts to produce an artificial hand with human hand capabilities such as touch and the sensing of temperature, texture, pressure, and friction. This talk will report research progress on prosthetic sensing, algorithms, and applications in the Intelligent Systems and Biomedical Robotics Group, in the field of prosthetic sensing and skill transfer. After a brief review of state-of-the-art prosthetics, a unified computational framework is presented with the aim of instilling human hand manipulation skills into an artificial prosthesis, considering trade-offs between pattern recognition accuracy and computational efficiency. Priority is then given to sensing-related problems underlying effective solutions to prosthetic manipulation. Finally, future directions are outlined and discussion is invited.

First, a compact computational account of hand-centered research is introduced, covering the principles, methodologies, and practical issues behind human hands, robot hands, rehabilitation hands, prosthetic hands, and their applications. For more details on recent scientific findings and technologies, including human hand analysis and synthesis, hand motion capture, recognition algorithms, and applications, please refer to [1], which addresses how to transfer human hand skills to prosthetic manipulation in a computational context.

Unconstrained human-hand motions, which consist of grasp motions and in-hand manipulations, pose a fundamental challenge that many algorithms have to face in both theoretical and practical development, mainly due to the complexity and dexterity of the human hand. Second, a novel unified fuzzy framework is presented that comprises a set of recognition algorithms (time clustering, the fuzzy active axis Gaussian mixture model, and the fuzzy empirical copula), ranging from numerical clustering to data dependence structure, in the context of optimal real-time human-hand motion recognition [2]. Time clustering is a fuzzy time-modeling approach based on fuzzy clustering and Takagi-Sugeno modeling with a numerical value as output. The fuzzy active axis Gaussian mixture model effectively extracts abstract Gaussian patterns to represent components of hand gestures with fast convergence. The fuzzy empirical copula utilizes the dependence structure among the finger joint angles to recognize the motion type. Third, nonlinear measures based on recurrence plots are investigated as a tool to evaluate the hidden dynamical characteristics of surface electromyography (sEMG) signals during four different hand movements. A series of experimental tests in this study shows that the dynamical characteristics of sEMG data obtained with recurrence quantification analysis (RQA) can distinguish different hand grasp movements. An adaptive neuro-fuzzy inference system (ANFIS) is applied to evaluate how well these measures identify the grasp movements. Fuzzy Gaussian Mixture Models (FGMMs) are proposed and employed as a nonlinear classifier to recognise different hand grasps and in-hand manipulations captured from different subjects. Experimental results have confirmed the effectiveness of the proposed methods [3,4].

The talk concludes with an emphasis on giving priority to sensing-related problems and solutions. It is expected that the talk will report the state of the art and provide insights into real-time prosthetic algorithms, the human perception-action cycle, and potential hand-centred healthcare solutions.
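As a rough illustration of the recurrence-based measures mentioned above, the following sketch computes a recurrence matrix and the recurrence rate, one of the basic RQA quantities. It is a simplified stand-in and not the method of [3]: scalar samples are compared directly without time-delay embedding, the threshold `eps` is a hypothetical choice, and synthetic signals replace real sEMG recordings.

```python
import math


def recurrence_matrix(signal, eps):
    """Binary recurrence matrix: entry (i, j) is 1 when samples i and j
    are within eps of each other.

    Assumption: scalar samples are compared directly, without the
    time-delay embedding that a full analysis would normally apply.
    """
    n = len(signal)
    return [[1 if abs(signal[i] - signal[j]) <= eps else 0 for j in range(n)]
            for i in range(n)]


def recurrence_rate(matrix):
    """Fraction of recurrent points, excluding the main diagonal."""
    n = len(matrix)
    if n < 2:
        return 0.0
    recurrent = sum(matrix[i][j] for i in range(n) for j in range(n) if i != j)
    return recurrent / (n * (n - 1))


# Synthetic stand-ins for sEMG: a periodic signal revisits its states,
# while a steadily increasing ramp never does.
periodic = [math.sin(2 * math.pi * k / 8) for k in range(32)]
ramp = [0.1 * k for k in range(32)]

rr_periodic = recurrence_rate(recurrence_matrix(periodic, eps=0.05))
rr_ramp = recurrence_rate(recurrence_matrix(ramp, eps=0.05))
```

The periodic signal yields a clearly positive recurrence rate and the ramp yields zero, which shows how such measures can separate signals with different dynamics.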

[1] H. Liu, “Exploring human hand capabilities into embedded multifingered object manipulation,” IEEE Transactions on Industrial Informatics, 2011, 7(3):389-398.

[2] Z. Ju, H. Liu, “A unified fuzzy framework for human hand motion recognition,” IEEE Transactions on Fuzzy Systems, 2011, 19(5):901-913.

[3] G. Ouyang, X. Zhu, Z. Ju, H. Liu, “Dynamical characteristics of surface EMG signals of hand grasps via recurrence plot,” IEEE Journal of Biomedical and Health Informatics, 2014, 18(1):257-266.

[4] Z. Ju, G. Ouyang, M. Wilamowska-Korsak, H. Liu, “Surface EMG based hand manipulation identification via nonlinear feature extraction and classification,” IEEE Sensors Journal, 2013, 13(9):3302-3311.

Session: TP5-3-1

Time: 15:35-16:15

Elucidation of Brain Functions by Dipole Estimation of EEGs and its Applications to Brain Computer Interface

Prof. Takahiro Yamanoi

Hokkai-Gakuen University, Japan

According to research on the human brain, a visual stimulus is first processed in V1 in the occipital lobe. In the early stage, a stimulus from the right visual field is processed in the left hemisphere and a stimulus from the left visual field is processed in the right hemisphere. Processing then proceeds to the parietal associative area. Higher-order processes of the brain thereafter show laterality. For instance, 99% of right-handed people and 70% of left-handed people have their language areas, Wernicke's area and Broca's area, in the left hemisphere. The present author and his collaborators presented several visual stimuli to subjects and measured their electroencephalograms (EEGs). The EEGs were then summed and averaged according to the type of stimulus and the subject to obtain event-related potentials (ERPs). ERP peaks were detected and analyzed at their latencies by the equivalent current dipole source localization (ECDL) method using a three-dipole model. The present author explains the ECDL method, which is implemented in the software SynaCenterPro (NEC Corporation). The software estimates the sources of the ERPs and, using each subject's MRI brain image, makes it possible to superimpose the sources on that image. The lecture also explains the application of the method to some human visual recognition processes.

While measuring EEGs from subjects watching images of arrows (↑, ↓, →, and ←), the author found that images with opposite meanings showed opposite potentials. Reasoning that these could serve as a switch, he applied the findings to a brain computer interface (BCI).

The author's group has measured EEGs while subjects recalled ten types of images presented on a CRT. The presented images were ten types of line drawings of body parts, tetrapods, home appliances, and fruits. Canonical discriminant analysis was applied to these single-trial EEGs. Four EEG channels over the right frontal and temporal areas were used for discrimination: Fp2, F4, C4, and F8 of the international 10-20 system. The EEGs were sampled from 400 ms to 900 ms at 25 ms intervals, giving 21 samples per channel. The data were also resampled with shifts of -1 ms and -2 ms. With 21 variates for each of the 4 channels, the data were 84-dimensional vectors, and the number of data was 360. Using canonical discriminant analysis with the so-called jackknife method, the discrimination rate was almost 80% for the ten types. These results were applied to control the e-puck micro robot.
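The averaging step that turns single-trial EEGs into an ERP can be sketched as follows. This is a minimal illustration under stated assumptions: the epochs are already aligned to stimulus onset and equally long, the amplitudes are made-up numbers, and the helper names and the 2 ms sampling interval are hypothetical. It does not reproduce the ECDL analysis itself.

```python
def average_erp(trials):
    """Average equally long, stimulus-aligned EEG epochs sample by sample."""
    n = len(trials)
    return [sum(samples) / n for samples in zip(*trials)]


def peak_latency_ms(erp, sampling_interval_ms):
    """Latency of the sample with the largest absolute amplitude."""
    peak_index = max(range(len(erp)), key=lambda i: abs(erp[i]))
    return peak_index * sampling_interval_ms


# Hypothetical epochs for one stimulus type (arbitrary amplitude units).
trials = [
    [0, 1, 3, 1, 0],
    [0, 3, 5, 1, 0],
    [0, 2, 4, 1, 0],
]

erp = average_erp(trials)
latency = peak_latency_ms(erp, sampling_interval_ms=2)
print(erp, latency)  # prints "[0.0, 2.0, 4.0, 1.0, 0.0] 4"
```

Averaging over trials suppresses activity that is not time-locked to the stimulus, so the remaining peaks give the latencies at which source localization is applied.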
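The dimensionality of the feature vectors follows directly from the sampling scheme, as the short sketch below checks. The data layout (a dict of channel name to latency-indexed amplitudes) and the helper names are assumptions made for illustration. Only the channel names, the 400 to 900 ms window, and the 25 ms step come from the abstract.

```python
def sample_times(start_ms, end_ms, step_ms):
    """Sampling latencies from start to end inclusive, in milliseconds."""
    return list(range(start_ms, end_ms + 1, step_ms))


def feature_vector(trial, channels, times):
    """Concatenate the samples of each channel at the given latencies.

    `trial` maps a channel name to a dict {latency_ms: amplitude};
    this layout is an assumption made for illustration.
    """
    return [trial[ch][t] for ch in channels for t in times]


channels = ["Fp2", "F4", "C4", "F8"]
times = sample_times(400, 900, 25)

# Dummy trial with zero amplitudes, used only to check the dimensions.
trial = {ch: {t: 0.0 for t in times} for ch in channels}
vector = feature_vector(trial, channels, times)

print(len(times), len(vector))  # prints "21 84"
```

The inclusive window gives (900 - 400) / 25 + 1 = 21 latencies per channel, and 21 × 4 channels gives the 84 dimensions used in the discriminant analysis.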

Session: TP6-1-1

Time: 9:55-10:35

Addressing class imbalance in pattern classification problems

Prof. Gerald Schaefer

Loughborough University, UK

Pattern classification problems occur in a variety of domains, and hence well-performing classification algorithms are highly sought after. Typically, a classifier is optimised based on some training data and thus effectively learns from experience. One challenge that occurs for certain classification problems is that the training data is imbalanced, that is, there are (many) more training patterns available for some classes than for others. Since classifiers are conventionally tuned based on overall classification performance, accuracy for the minority classes will suffer even though it is often these classes that are of higher interest. In my talk, I will present strategies for successfully addressing class imbalance in pattern classification tasks. In particular, I will focus on such approaches in the context of ensemble classifiers, which employ more than one predictor in order to yield improved and more robust classification performance.
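The effect of imbalance on overall accuracy can be seen in a small sketch. The abstract does not name specific strategies, so the example only assumes two generic ingredients: balanced accuracy (mean per-class recall) as an alternative measure, and random oversampling of the minority class as one common rebalancing step. The data and function names are hypothetical, and ensemble construction is not shown.

```python
import random
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of patterns classified correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so every class counts equally."""
    recalls = []
    for label in sorted(set(y_true)):
        indices = [i for i, t in enumerate(y_true) if t == label]
        hits = sum(y_pred[i] == label for i in indices)
        recalls.append(hits / len(indices))
    return sum(recalls) / len(recalls)


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority samples until classes are balanced."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for label, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == label]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(label)
    return out_samples, out_labels


# Hypothetical data: 95 majority patterns (class 0), 5 minority (class 1).
y_true = [0] * 95 + [1] * 5
y_majority = [0] * 100  # a classifier that always predicts the majority class

acc = accuracy(y_true, y_majority)           # 0.95
bal = balanced_accuracy(y_true, y_majority)  # 0.5

samples = list(range(100))
balanced_samples, balanced_labels = random_oversample(samples, y_true)
```

A classifier that ignores the minority class entirely still reaches 95% accuracy here, while its balanced accuracy is only 50%. This is why tuning on overall performance alone penalises the classes of interest.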

Session: TP6-2-1

Time: 11:10-11:50

Challenges and Constraints on Action Analysis

Prof. Md. Atiqur Rahman Ahad

University of Dhaka, Bangladesh

What constitutes an action or an activity is difficult to define, as there is no clear, distinct nomenclature [1-2]. However, an action refers to a simple, atomic movement performed by a single person, whereas an activity denotes a more complex scenario that involves a group of people [3]. Action/activity analysis, recognition, and understanding from video sequences have various applications in HCI, man-machine interaction, biomechanics, robotics, surveillance, games, etc.

Human action analysis is a challenging problem due to large variations in human motion and appearance, camera viewpoint, and settings [4]. Some important but common motion recognition problems have still not been properly solved, even though a number of good approaches have been proposed and evaluated.

The challenges are manifold. One important area is stereo vision and stereo reconstruction (especially for analyzing pedestrians’ activities). For action recognition in complex scenes, dimensional variations matter a lot [1]. A few diverse areas are static vs. dynamic scenes, identification or recognition of multiple objects, analysis of the scene context, and goal-directed behavior analysis. These issues can be handled for action understanding. In order to understand any goal-directed behavior, we need to analyze the main context of the scene in addition to recognizing objects. It is important to relate the objects to action verbs. It is also necessary to consider action-context, which defines an action based on the various actions or activities around the person of interest in a scene with multiple subjects. So far, we have been dealing with familiar actions and activities. However, there is high demand for understanding various unfamiliar activities, especially in video surveillance applications. It is necessary to know or predict what is going to happen next based on the current understanding of the actions.

Understanding a scene and its context is crucial to understanding an action. We need new and challenging datasets for action or activity analysis, and we need to build diversity and multiple dimensions into them.

There are various other challenging aspects, and some of these are very difficult to address given the present progress in action understanding; hence, these issues require time. The better and more robust our approaches are, the wider and more realistic the applications we can address in the future.

References:

1. Md. Atiqur Rahman Ahad, “Computer Vision and Action Recognition: A Guide for Image Processing and Computer Vision Community for Action Understanding”, Atlantis / Springer, 2011.

2. Md. Atiqur Rahman Ahad, “Motion History Images for Action Recognition and Understanding”, Springer, 2012.

3. T. Lan, Y. Wang, W. Yang, G. Mori, “Beyond Actions: Discriminative Models for Contextual Group Activities”, Neural Information Processing Systems (NIPS).

4. R. Poppe, “Vision-based human motion analysis: an overview”, Computer Vision and Image Understanding, 2007, 108(1-2):4-18.

5. Md. Atiqur Rahman Ahad, J Tan, H Kim, S Ishikawa, “Motion History Image: Its Variants and Applications”, Machine Vision and Applications, Vol. 23, No. 2, pp. 255-281, 2012.